I’ve spent the last couple of days playing with Azure Machine Learning (AML) to find out what I can do with it. To do so, I came up with an idea that might or might not be useful for our recruitment team: I wanted to predict whether a given candidate would be hired by us or not. I pulled historical data and applied a few statistical models to it. The end result was not as good as I expected it to be. I guess this was because my initial dataset was too small.

How to start

I’ve done some ML and AI courses as part of my university degree but that was ages ago :) and I needed to refresh my knowledge. Initially I wanted to learn about algorithms so I started reading Doing Data Science, but it was a bit too dry and I needed something focused more on doing than on theory. The recently released Microsoft Azure Essentials: Azure Machine Learning was exactly what I was looking for. The book is easy to read and explains step by step how to use AML.

Gathering initial data is hard

For some reason I thought that once I had the data extracted from the source system it would be easy to use. Unfortunately this was not true, as the data was inconsistent and incomplete. I spent a significant amount of time filling the gaps and sometimes even removing data when it did not make sense in the context of the problem I was trying to solve. At the end of the whole process it turned out that I had removed 85% of my original dataset. This made me question whether I should even be using it. But this was a learning exercise and nobody was going to make any decisions based on it, so I didn’t give up.
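To make the "fill the gaps or remove the row" idea concrete, here is a minimal Python sketch. It is not the cleansing code used for this experiment: the field names (`years_experience`, `interview_score`, `hired`), the sample rows and the rules are all made up for illustration.

```python
from statistics import median

# Hypothetical candidate records; field names and values are illustrative only.
raw_rows = [
    {"years_experience": 5, "interview_score": 7.5, "hired": "yes"},
    {"years_experience": None, "interview_score": 6.0, "hired": "no"},
    {"years_experience": 3, "interview_score": None, "hired": "no"},
    {"years_experience": -2, "interview_score": 8.0, "hired": "yes"},  # nonsensical value
    {"years_experience": 10, "interview_score": 9.0, "hired": None},   # unknown outcome
]


def clean(rows):
    """Fill gaps where a sensible default exists and drop rows that make no sense."""
    valid_experience = [
        r["years_experience"]
        for r in rows
        if r["years_experience"] is not None and r["years_experience"] >= 0
    ]
    fallback_experience = median(valid_experience)

    cleaned = []
    for row in rows:
        # The outcome cannot be guessed, so rows without it are dropped.
        if row["hired"] not in ("yes", "no"):
            continue
        # Assumption: a missing interview score makes the row unusable.
        if row["interview_score"] is None:
            continue
        experience = row["years_experience"]
        if experience is None:
            experience = fallback_experience  # fill the gap with the median
        elif experience < 0:
            continue  # negative experience makes no sense, drop the row
        cleaned.append({
            "years_experience": experience,
            "interview_score": row["interview_score"],
            "hired": row["hired"] == "yes",
        })
    return cleaned


cleaned_rows = clean(raw_rows)
removed_fraction = 1 - len(cleaned_rows) / len(raw_rows)
print(f"kept {len(cleaned_rows)} of {len(raw_rows)} rows, removed {removed_fraction:.0%}")
```

Printing the removed fraction at the end is a cheap way to notice early how much of the dataset the rules throw away.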

Data cleansing was a mundane task, but at the end of it I had a much better understanding of the data itself and the relationships between its attributes. It’s a nice and, I would say, important side effect of the whole process. Looking at data in its tabular form doesn’t tell the full story, so, as someone mentioned on the Internet, it is important to visualize it.

AML Studio solves this problem very well. Every dataset can be quickly visualized and its basic statistical characteristics (histogram, mean, median, min, max, standard deviations) are displayed next to the charts. I found this feature very useful. It helped me spot a few mistakes in my data cleansing code.
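The same kind of summary can be approximated outside AML Studio with the Python standard library. The sketch below is only an illustration of the idea, not what AML Studio does internally; the `years_experience` column and its values are hypothetical.

```python
from collections import Counter
from statistics import mean, median, stdev


def summarize(values):
    """Basic statistical characteristics of a numeric column."""
    return {
        "min": min(values),
        "max": max(values),
        "mean": mean(values),
        "median": median(values),
        "stdev": stdev(values),
    }


def histogram(values, bin_width):
    """Count values per bin; each key is the lower edge of a bin."""
    return Counter((v // bin_width) * bin_width for v in values)


# Hypothetical column; 40 is the kind of outlier a cleansing bug might leave behind.
years_experience = [1, 2, 2, 3, 5, 8, 40]

summary = summarize(years_experience)
bins = histogram(years_experience, bin_width=5)

print(summary)
for lower_edge in sorted(bins):
    # One '#' per value that falls into the bin.
    print(f"{lower_edge:>3}+ {'#' * bins[lower_edge]}")
```

A large gap between the mean and the median, or a lonely bar far away from the rest of the histogram, is usually the first hint that something went wrong during cleansing.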

Trying to predict the future

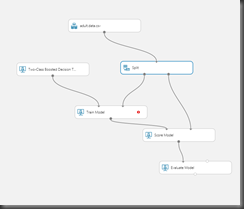

Once the data had been cleaned, I opened AML Studio, which is an online tool that lets you design ML experiments. It took me only a couple of minutes to train a Two-Class Boosted Decision Tree model. To do it in AML Studio you need to take the following steps:

- Import data

- Split data into two sets: training set and test set

- Pick the model/algorithm

- Train the model using the training set

- Score the trained model using the test set

- Evaluate how well the model has been trained

Each of the steps is represented by a separate visual element on the design surface which makes it very easy to create and evaluate experiments.
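The steps above can also be sketched in plain Python. This is a toy stand-in, not the AML experiment: the data is synthetic, and a single-threshold "decision stump" replaces the Two-Class Boosted Decision Tree so that the example stays dependency-free. All function names are made up for illustration.

```python
import random


def split(rows, train_fraction, seed):
    """Shuffle a copy of the rows and cut it into a training and a test set."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def train_stump(train_rows):
    """Pick the threshold that classifies the most training rows correctly."""
    best_threshold, best_correct = None, -1
    for threshold in sorted({x for x, _ in train_rows}):
        correct = sum((x >= threshold) == label for x, label in train_rows)
        if correct > best_correct:
            best_threshold, best_correct = threshold, correct
    return best_threshold


def score(threshold, rows):
    """Predict a label for every row using the trained threshold."""
    return [x >= threshold for x, _ in rows]


def evaluate(predictions, rows):
    """Accuracy: the share of predictions that match the known labels."""
    hits = sum(p == label for p, (_, label) in zip(predictions, rows))
    return hits / len(rows)


# 1. Import data: synthetic (interview_score, hired) pairs.
data = [(s, s >= 6) for s in range(1, 11)] * 3
# 2. Split data into a training set and a test set.
train_rows, test_rows = split(data, train_fraction=0.7, seed=42)
# 3 + 4. Pick the model (a decision stump) and train it on the training set.
threshold = train_stump(train_rows)
# 5. Score the trained model using the test set.
predictions = score(threshold, test_rows)
# 6. Evaluate how well the model has been trained.
accuracy = evaluate(predictions, test_rows)
print(f"threshold={threshold}, test accuracy={accuracy:.2f}")
```

Keeping the test set away from training is the important part: the accuracy printed at the end is measured only on rows the model has never seen.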

I tried a few more algorithms but none of them gave me better results. This part was more time-consuming than it should have been. Unfortunately, at the moment there is no API that would allow me to automate the process of creating and running experiments. Somebody has already suggested this as a possible improvement, so there is a chance it will be added in the future: http://feedback.azure.com/forums/257792-machine-learning/suggestions/6179714-expose-api-for-model-building-publishing-automat. It would be great to automatically evaluate several models with different parameters and then pick the one that gives the best predictions.
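To illustrate what "evaluate several models with different parameters and pick the best one" could look like, here is a small Python sketch. It does not use any AML API (none exists for this at the time of writing); `run_experiment` is a hypothetical stand-in that scores a trivial rule-based model on made-up validation data.

```python
from itertools import product


def grid(**options):
    """Expand named lists of values into every combination of parameters."""
    names = list(options)
    return [dict(zip(names, values)) for values in product(*options.values())]


# Made-up validation rows: (interview_score, years_experience, hired).
validation = [
    (7, 3, True), (8, 1, False), (5, 6, False),
    (9, 4, True), (6, 2, True), (4, 1, False),
]


def run_experiment(params):
    """Hypothetical stand-in for one experiment run; returns its accuracy."""
    hits = 0
    for interview_score, years, hired in validation:
        predicted = (interview_score >= params["min_score"]
                     and years >= params["min_years"])
        hits += predicted == hired
    return hits / len(validation)


def pick_best(param_sets, experiment):
    """Run every parameter set and return (best_accuracy, best_params)."""
    results = [(experiment(params), params) for params in param_sets]
    return max(results, key=lambda result: result[0])


best_accuracy, best_params = pick_best(
    grid(min_score=[5, 6, 7], min_years=[1, 2, 3]),
    run_experiment,
)
print(best_params, best_accuracy)
```

With a real automation API, `run_experiment` would create, run and evaluate an experiment instead of scoring a hard-coded rule; the surrounding loop would stay the same.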

But it is Drag & Drop based programming!

In most cases Drag & Drop based programming demos well and sells well, but it has serious limitations when it comes to extensibility and maintainability. “Code” written in this way is hard or impossible to test and offers little or no ability to reuse logic, so the “copy and paste” approach tends to be used liberally. At first glance AML seems to belong to this category, but after taking a closer look we can see that there is light at the end of the tunnel.

Each AML experiment can be exposed as an independently scalable HTTP endpoint with very well defined input and output. This means that each of them can be easily tested in isolation. This solves the first problem. The second problem can be solved by keeping the orchestration logic outside of AML (e.g. in a C# service) and treating each AML endpoint as a pure function. In this way we let AML do what it is good at without introducing the unnecessary complexity of implementing “ifs” visually.
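Here is a minimal sketch of that idea, written in Python rather than C# to keep it short. Everything specific in it is an assumption: the URL, the JSON request and response shapes (including the `hired` field) and the routing rules are invented for illustration and are not the real AML contract; authentication is left out entirely.

```python
import json
from typing import Callable
from urllib import request

# A transport takes (url, json_body) and returns the response body as text.
Transport = Callable[[str, str], str]


def http_transport(url: str, body: str) -> str:
    """Real HTTP POST via urllib; auth headers are deliberately omitted."""
    req = request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with request.urlopen(req) as response:
        return response.read().decode("utf-8")


def predict_hired(candidate: dict, transport: Transport, url: str) -> bool:
    """Treat the endpoint as a pure function: candidate in, prediction out."""
    reply = json.loads(transport(url, json.dumps(candidate)))
    return bool(reply["hired"])  # hypothetical response field


def decide_next_step(candidate: dict, transport: Transport, url: str) -> str:
    """The 'ifs' live in ordinary code, not on the visual design surface."""
    if candidate["years_experience"] < 1:
        return "graduate programme"
    if predict_hired(candidate, transport, url):
        return "invite to interview"
    return "manual review"


def fake_transport(url: str, body: str) -> str:
    """Test double that replaces the endpoint with a fixed rule."""
    candidate = json.loads(body)
    return json.dumps({"hired": candidate["interview_score"] >= 7})


ENDPOINT = "https://example.invalid/score"  # placeholder, not a real endpoint
strong = {"years_experience": 5, "interview_score": 8}
weak = {"years_experience": 5, "interview_score": 4}
print(decide_next_step(strong, fake_transport, ENDPOINT))
print(decide_next_step(weak, fake_transport, ENDPOINT))
```

Passing the transport in as a parameter is what makes the orchestration testable without a network: tests use `fake_transport`, production code would pass `http_transport`.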

I’ve trained the perfect model, now what?

As I mentioned in the previous paragraph, the trained model can be exposed as an HTTP endpoint. On top of that, AML creates an Excel file that is a very simple client built on top of the HTTP endpoint. As you type parameters, the section with predicted values gets refreshed. And if you want to share your awesome prediction engine with the rest of the world, you can always upload it to AML Gallery. One of the APIs available there is a service that estimates a person’s age based on their photo.